AI in Video Production: 10 Applications and Benefits You Need to Know

Discover how AI transforms video production from scripting to editing, boosting creativity and efficiency while cutting costs.

AI in Video Production

Artificial intelligence is reshaping video production by making traditional processes faster and less labor-intensive. Tasks that once required extensive manual work and expertise across several disciplines can now be assisted, or in some cases fully automated, by AI-powered tools and platforms.

AI is transforming the way creative teams work on videos, from coming up with ideas to delivering the final product.

This technology solves major problems that production teams have faced for a long time: tight deadlines, limited budgets, repetitive tasks, and the increasing need for large amounts of video content online. Now, AI video creators and specialized algorithms can take care of time-consuming jobs like editing, color correction, transcription, and even parts of scriptwriting. This shift allows human talent to focus on important creative decisions instead of technical tasks.

AI in video production offers clear benefits in three main areas:

- Cost reduction: Automating tasks that require a lot of manual work

- Improved efficiency: Speeding up production timelines from weeks to days

- Enhanced creativity: Providing smart suggestions and opening up new creative possibilities

The use of AI in video production services is an evolution that enhances human expertise rather than replacing it. Studios and creative teams that understand how to use these tools effectively will have an edge over their competitors in terms of speed, scale, and consistent quality.

In this article, we will explore ten specific ways in which AI adds real value to modern video workflows.

1. Concept Development and Scriptwriting Automation

AI scriptwriting tools have changed the way videos are planned and written. These platforms analyze large amounts of data, including successful scripts, storytelling techniques, and audience preferences, and use it to draft content that closely matches specific creative requirements and brand standards.

Jasper.ai is a prime example of this change. It offers a wide range of customizable templates for generating scripts that can be tailored to various video formats such as short social media clips or in-depth documentaries. The platform takes into account important factors like the target audience's characteristics, the desired tone of voice, key messages to be conveyed, and the overall length of the video in order to generate well-structured scripts complete with dialogue, scene descriptions, and timing recommendations.

The practical benefits go beyond just saving time:

- Faster revisions: Instead of spending days or weeks coming up with different versions of a script, creative teams can now generate multiple options within minutes. This flexibility allows them to experiment with different storytelling techniques before committing resources.

- Consistency in branding: AI tools ensure that brand voice guidelines are consistently applied across all script outputs. As a result, there is less need for extensive revision cycles and more efficient production processes.

- Creativity backed by data: Scripts generated by these tools not only incorporate proven techniques for engaging audiences but also maintain originality. This balance between creative risk-taking and meeting audience expectations is crucial for successful video content.

AI video editors and platforms like Adobe Premiere Pro are increasingly incorporating these scriptwriting features directly into their production workflows. This integration lets teams move smoothly from brainstorming to production planning. For instance, scripts can be automatically formatted for teleprompters, shot lists, and editing timelines. The technology handles structural tasks such as placing act breaks, calculating pacing, and optimizing word counts, which gives writers more freedom to focus on nuance and emotional impact.

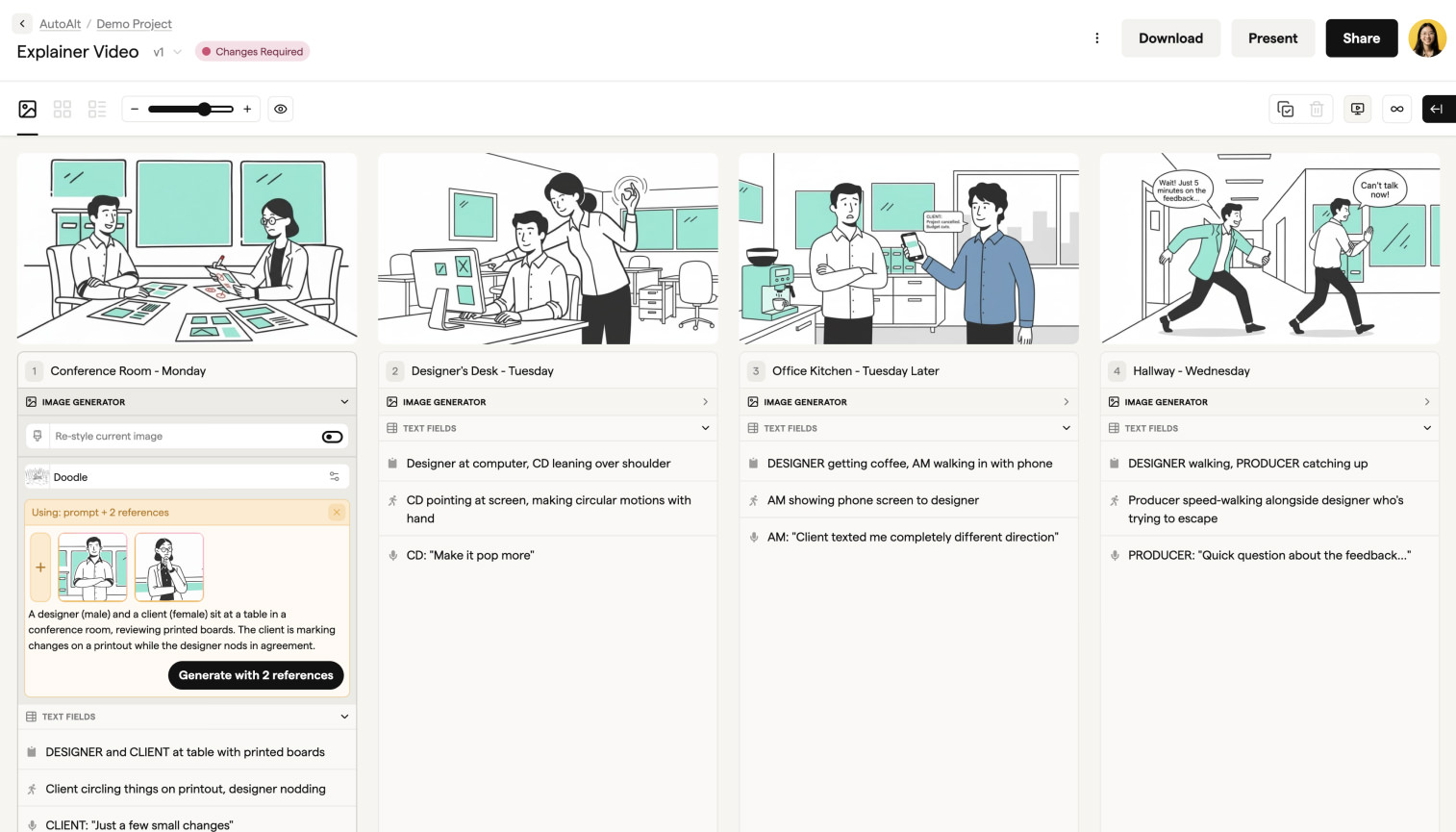

2. Storyboarding and Shot Planning with AI-generated References

Storyboarding is an essential part of any video project. It takes the ideas from the script and turns them into a series of images that guide everyone involved in making the video. In the past, storyboarding required talented artists and a lot of time, which often caused delays in the planning stage. But now, with AI storyboarding software, these challenges are being overcome.

How AI Storyboarding Works

AI storyboarding software uses artificial intelligence to create visual references based on descriptions from the script. This means that directors and cinematographers can see what each scene will look like before they start filming.

There are several tools available that can generate these reference images, such as Midjourney, DALL-E, and Stable Diffusion. These tools allow production teams to input specific details about each scene, including descriptions of the characters and the overall mood they want to convey.

Benefits of AI Storyboarding

The use of AI-generated storyboards offers several advantages over traditional methods:

- Speed: With AI storyboarding, directors can generate multiple visual interpretations of a single scene within minutes. This allows them to explore different options for camera angles, lighting setups, and composition techniques without having to rely on an illustrator or wait days for revisions.

- Flexibility: Production teams can make quick adjustments based on feedback by refining their prompts instead of requesting complete redrawing of frames. This flexibility is especially valuable when working remotely with distributed teams who need shared visual references to stay aligned creatively throughout the production process.

- Cost-effectiveness: By reducing reliance on external illustrators or artists, AI storyboarding has the potential to save costs associated with hiring additional resources for every revision cycle.

Practical Applications of AI Storyboarding

Here are some practical ways in which production teams can benefit from using AI-generated storyboards:

- Location scouting visualization: Generate reference images of desired environments when physical locations haven't been secured yet

- Character blocking: Create visual guides showing actor positioning and movement within frame

- Lighting design: Produce references demonstrating specific lighting moods and color temperatures

- Camera angle exploration: Test various shot compositions before committing to equipment and crew resources

By applying these techniques, production teams can strengthen their planning, improve communication among team members, and create more compelling videos for their target audience. As filmmaking adopts more of this technology, the goal remains a balance between innovation and artistry, and understanding the broader impact of AI on film production can inform future projects.

3. Filming Assistance Including Casting/Location Suggestions Using Script Analysis

AI filming tools now extend beyond post-production into the production phase itself, analyzing scripts to recommend optimal casting choices and locations before cameras roll. Natural language processing algorithms parse screenplays to identify character attributes, emotional tones, and environmental requirements that inform pre-production decisions.

How AI Helps with Casting

Script analysis platforms evaluate dialogue patterns, character descriptions, and narrative context to suggest actors whose previous work aligns with the role's demands. These systems cross-reference casting databases with performance metrics, genre compatibility, and availability data. The technology identifies subtle requirements—a character's regional accent, physical characteristics, or emotional range—that might otherwise require extensive manual research.

How AI Helps with Location Scouting

Location scouting receives similar treatment through AI-powered geographic analysis. By extracting setting descriptions and scene requirements from scripts, these tools match production needs with location databases containing thousands of venues. The systems consider practical factors:

- Logistical accessibility for crew and equipment

- Lighting conditions based on shooting schedule requirements

- Acoustic properties for dialogue-heavy scenes

- Permit complexity and regulatory considerations

- Budget alignment with production financial parameters

This computational approach reduces pre-production timelines from weeks to days. Production teams receive ranked recommendations with supporting data (reference images, cost estimates, and logistical assessments), enabling faster, more informed decisions that align creative vision with practical constraints.

4. On-set Technical Support: Real-time Focus/Exposure Adjustment, Object Tracking

AI on-set support tools are changing the way filming is done by automating important camera functions that usually need constant manual adjustment. These systems look at the incoming footage as it's being recorded and make immediate corrections to keep the image quality at its best throughout the entire shoot.

Autofocus systems powered by machine learning

Autofocus systems powered by machine learning track subjects more reliably than conventional autofocus that relies on contrast or phase detection alone. Canon's Dual Pixel CMOS AF II and Sony's Real-time Tracking use deep-learning subject recognition to find and follow faces, eyes, and bodies, even when subjects turn away or move erratically. This capability proves essential for documentary work, run-and-gun productions, and any scenario where manual focus pulling becomes impractical.

Exposure adjustment algorithms

Exposure adjustment algorithms continuously monitor scene brightness and adjust settings to prevent blown highlights or crushed shadows. Modern auto-exposure systems evaluate histogram data across multiple zones of the frame, compensating for sudden lighting changes without the visible brightness "pumping" of older auto-exposure implementations.
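
As a rough illustration of zone-based exposure evaluation, the sketch below splits a grayscale frame into a grid of zones, measures how much of each zone is clipped, and suggests a small exposure correction. The function names, the clipping thresholds, and the one-third-stop step are assumptions chosen for this example; it is not any camera vendor's algorithm.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of 0-255 luminance values


def zone_clipping(frame: Frame, rows: int = 2, cols: int = 2,
                  low: int = 5, high: int = 250) -> List[Tuple[float, float]]:
    """Return (shadow_fraction, highlight_fraction) for each zone, row by row."""
    height, width = len(frame), len(frame[0])
    stats = []
    for r in range(rows):
        for c in range(cols):
            y0, y1 = r * height // rows, (r + 1) * height // rows
            x0, x1 = c * width // cols, (c + 1) * width // cols
            pixels = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            shadows = sum(p <= low for p in pixels) / len(pixels)
            highlights = sum(p >= high for p in pixels) / len(pixels)
            stats.append((shadows, highlights))
    return stats


def suggest_ev_shift(stats: List[Tuple[float, float]],
                     limit: float = 0.05, step: float = 1 / 3) -> float:
    """Suggest an exposure shift in stops (negative darkens, positive brightens)."""
    worst_shadow = max(s for s, _ in stats)
    worst_highlight = max(h for _, h in stats)
    # Protect highlights first: clipped highlights are usually unrecoverable.
    if worst_highlight > limit and worst_highlight >= worst_shadow:
        return -step
    if worst_shadow > limit:
        return step
    return 0.0
```

Applying small fixed steps per frame, rather than jumping straight to a target value, is one simple way to avoid abrupt brightness changes. The sketch assumes the frame is at least as large as the zone grid.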

Object tracking extends beyond focus

Object tracking extends beyond focus to include gimbal stabilization and framing assistance. AI-driven gimbals from DJI and Zhiyun predict subject movement patterns, adjusting pan and tilt motors preemptively rather than reactively. This predictive capability reduces jerky corrections and maintains smooth, professional-looking footage even when operators lack extensive experience with stabilization equipment.
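
To make the difference between predictive and reactive correction concrete, here is a minimal sketch that extrapolates a subject's position a short time ahead and computes the framing offset a gimbal would need to correct. It assumes simple constant-velocity motion and normalized frame coordinates; the function names are hypothetical, and commercial gimbals may use different, more sophisticated models.

```python
from typing import Tuple

Point = Tuple[float, float]  # subject center in normalized frame coordinates (0..1)


def predict_position(prev: Point, curr: Point, dt: float, lookahead: float) -> Point:
    """Extrapolate where the subject will be `lookahead` seconds from now.

    `prev` and `curr` are positions observed `dt` seconds apart.
    """
    vx = (curr[0] - prev[0]) / dt
    vy = (curr[1] - prev[1]) / dt
    return (curr[0] + vx * lookahead, curr[1] + vy * lookahead)


def framing_error(target: Point, center: Point = (0.5, 0.5)) -> Point:
    """Offset to correct: positive x means pan right, positive y means tilt down."""
    return (target[0] - center[0], target[1] - center[1])
```

A reactive controller would call `framing_error` on the current position only. A predictive one calls it on the output of `predict_position`, so the motors start moving before the subject drifts off center.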

5. Automated Video Editing and Scene Assembly (e.g., Adobe Sensei Features)

AI video editing software is changing the game in post-production. It works by analyzing video footage, spotting important moments, and putting together scenes using editing techniques it has learned from professionals. This means that instead of spending days manually arranging clips on a timeline, editors can now do it in just a few hours while still keeping the story intact.

How Adobe Sensei is Leading the Way

Adobe Sensei is a great example of this new approach with its intelligent automation features:

- Auto Reframe: This feature looks at where the subject is positioned and how it moves in each frame. It then automatically adjusts the aspect ratios for different social media formats without needing any manual adjustments.

- Scene Edit Detection: This tool identifies where cuts happen in existing footage and separates clips at those points. This is especially useful when working with older material or raw camera files that don't have any metadata.

- Content-Aware Fill: If there's an unwanted object in a video frame, like a boom microphone that accidentally appears, this feature can remove it by analyzing the surrounding pixels and data from previous and future frames.

These features are designed to help editors save time on repetitive tasks so they can focus more on being creative and making decisions about storytelling. Instead of doing everything themselves, they can now use AI to handle rough cuts while they fine-tune things like pacing, emotional beats, and the overall structure of the narrative.
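
For readers curious how cut detection can work in principle, the sketch below flags a cut wherever the brightness histogram changes sharply between consecutive frames. This is a classic textbook approach used here for illustration, not a description of Adobe's implementation; the function names, bin count, and threshold are assumptions.

```python
from typing import List, Sequence


def histogram(frame: Sequence[int], bins: int = 8) -> List[float]:
    """Normalized luminance histogram for a flat sequence of 0-255 pixel values."""
    counts = [0] * bins
    for value in frame:
        counts[min(value * bins // 256, bins - 1)] += 1
    total = len(frame)
    return [c / total for c in counts]


def detect_cuts(frames: Sequence[Sequence[int]], threshold: float = 0.5) -> List[int]:
    """Return the indices of frames that start a new shot."""
    cuts = []
    previous = histogram(frames[0])
    for index in range(1, len(frames)):
        current = histogram(frames[index])
        # Half the L1 distance ranges from 0 (identical) to 1 (no overlap).
        difference = sum(abs(a - b) for a, b in zip(previous, current)) / 2
        if difference > threshold:
            cuts.append(index)
        previous = current
    return cuts
```

Histogram comparison ignores where pixels sit in the frame, so it tolerates camera and subject motion within a shot, but it can miss cuts between shots with similar overall brightness. The sketch assumes at least one non-empty frame.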

6. Color Grading, VFX Enhancement, and Animations Powered by Colourlab AI and Runway ML

Visual aesthetics define the emotional tone and professional quality of video content. AI color grading tools and AI VFX software now handle complex visual treatments that previously required specialized expertise and hours of manual adjustment.

Colourlab AI: Revolutionizing Color Grading

Colourlab AI transforms color grading workflows by analyzing footage and applying cinematic looks automatically. The platform learns from professional colorist decisions, enabling it to:

- Match color palettes across multiple clips instantly

- Preserve skin tones while adjusting ambient lighting

- Apply film emulation styles with scene-aware intelligence

- Generate consistent grades across multi-camera productions

Runway ML: Empowering Visual Effects and Motion Graphics

Runway ML extends AI capabilities into visual effects and motion graphics. The platform lets creators produce videos with sophisticated effects through:

- Background removal and replacement without green screens

- Object detection and tracking for compositing work

- Style transfer that applies artistic treatments to footage

- Motion tracking for seamless VFX integration

The technical advantage lies in machine learning models trained on thousands of professional projects. These systems recognize patterns in lighting conditions, shot composition, and visual continuity. A colorist who might spend 30 minutes per scene can now achieve similar results in under five minutes. VFX artists bypass tedious rotoscoping tasks, focusing instead on creative decisions that elevate the final product.

7. Audio Editing and Voiceovers Using ElevenLabs/Murf/WellSaid Labs

Sound design is a crucial part of professional video production, and AI audio editing software has completely changed how creators work in this area. These modern platforms use machine learning to automate tasks like reducing noise, balancing audio levels, and removing unwanted sounds—tasks that used to require specialized skills and hours of manual work.

Voice Synthesis Technology

Voice synthesis technology has become so advanced that it poses a challenge to traditional voiceover workflows. ElevenLabs offers speech that sounds natural with emotional depth and tonal variation, enabling producers to create professional narration in multiple languages without the need for studio bookings. The platform's ability to clone voices ensures a consistent narrator presence across long-form content series, preserving brand voice while minimizing reliance on talent availability.

Specialized Text-to-Speech Solutions

Murf focuses on converting text into speech with studio-quality output, providing a wide range of voices categorized by age, accent, and delivery style. This level of control allows creative teams to precisely match voice characteristics to project requirements. On the other hand, WellSaid Labs caters to enterprise applications by offering voices trained on professional voice actors with licensing agreements that address commercial use concerns.

Benefits of AI-Powered Audio Tools

The practical benefits go beyond just convenience:

- AI-powered audio tools allow for quick changes during the editing phase—producers can experiment with different narration styles, adjust pacing, or adapt content for international markets without having to reshoot.

- Automated dialogue replacement becomes more efficient.

- Background music can be dynamically adjusted to fit speech patterns, resulting in polished soundscapes with less technical effort required.

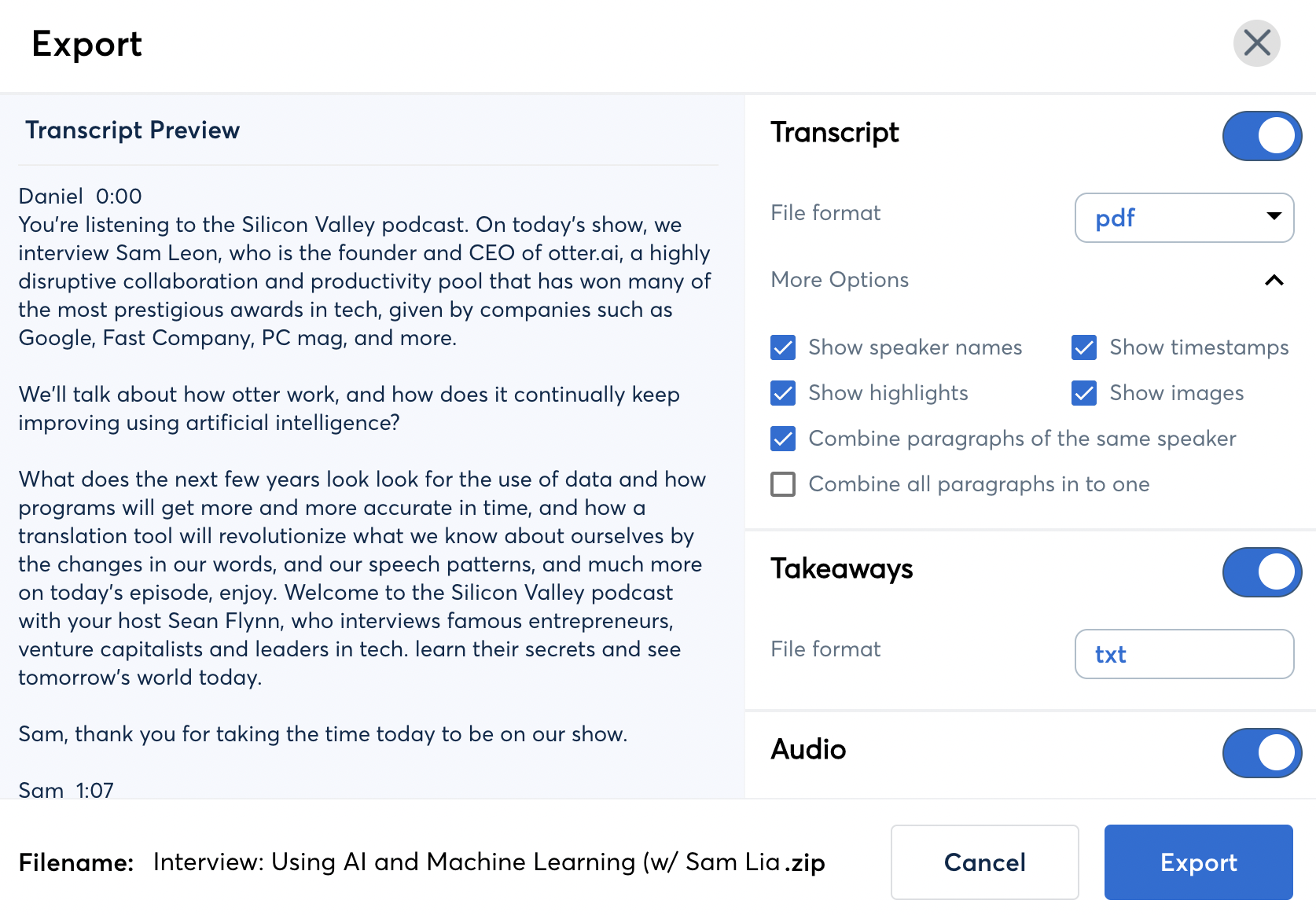

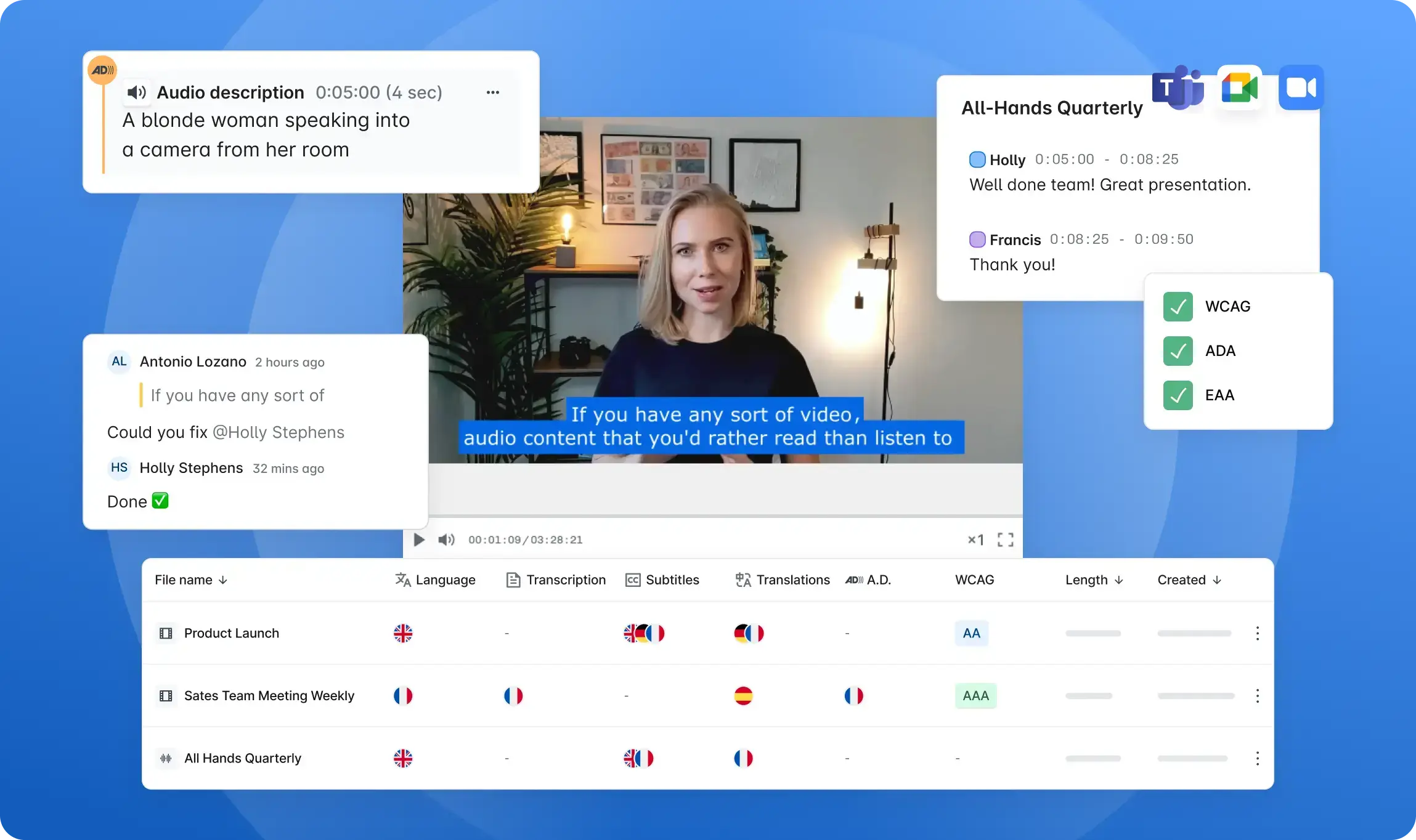

8. Transcription, Subtitling, Translation with Tools Like Subly and Otter.ai

AI transcription and subtitling software has changed how production teams handle accessibility and global distribution. These platforms remove much of the manual labor traditionally required to create accurate captions and multilingual versions of video content.

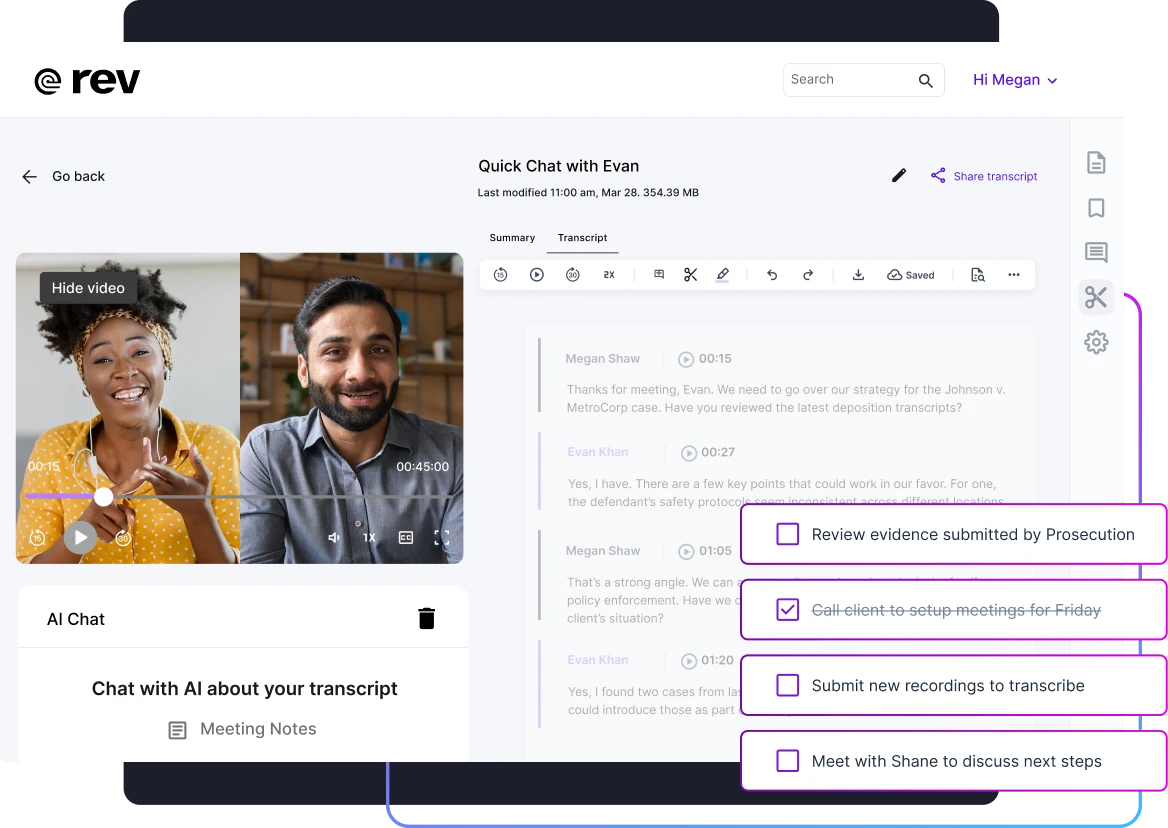

1. Otter.ai: Real-Time Transcription for Seamless Editing

Otter.ai processes spoken dialogue in real-time, generating timestamped transcripts that can be exported directly into editing software. The system identifies different speakers automatically and adapts to industry-specific terminology through machine learning. This capability proves particularly valuable during interviews, documentary production, and corporate video shoots where immediate transcript access streamlines the editing workflow.

2. Subly: Transcription, Subtitle Generation, and Translation Made Easy

Subly extends this functionality by combining transcription with automatic subtitle generation and translation into over 70 languages. The platform maintains proper timing synchronization while allowing editors to adjust positioning, styling, and formatting to match brand guidelines. The translation engine preserves context and idiomatic expressions rather than producing literal word-for-word conversions.
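
To show what a timestamped transcript turning into subtitles looks like in practice, the sketch below converts transcript segments into the widely used SubRip (.srt) text format. The segment structure and function names are assumptions made for this example and do not reflect the export format of any specific tool mentioned here.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start_seconds, end_seconds, text)


def srt_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS,mmm timestamp used by SubRip files."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments: List[Segment]) -> str:
    """Render timestamped transcript segments as SubRip (.srt) text."""
    blocks = []
    for number, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{number}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # A blank line separates consecutive subtitle cues.
    return "\n".join(blocks)
```

Because each cue carries its own start and end time, a translated subtitle track can reuse the same timestamps and replace only the text.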

3. Rev.ai and Descript: Advanced Features for Enhanced Efficiency

Rev.ai and Descript offer similar capabilities with added features like automatic filler word removal and speaker diarization. These tools reduce subtitle creation time from hours to minutes while maintaining accuracy rates above 95% for clear audio recordings.

The business impact extends beyond time savings. Videos with captions receive 40% more views on average and perform significantly better in search rankings. Multilingual subtitle options expand market reach without requiring separate voice recording sessions or localized production shoots.

9. Content Personalization via Analytics-driven Recommendations

AI content personalization tools are changing the way audiences interact with video content. They do this by analyzing viewer behavior, preferences, and consumption patterns. Using machine learning algorithms, these tools can process large amounts of data such as watch time, click-through rates, device types, and demographic information. With this information, they can create intelligent recommendations that align with each individual viewer's interests.

Real-world Examples: YouTube and Netflix

Platforms like YouTube and Netflix are already using this technology on a large scale to personalize their content offerings. But now, production teams have the opportunity to incorporate similar technologies into their own distribution strategies.

How AI Enhances Video Production

AI is not only useful in creating videos but also in strategically distributing them. Tools like Vidooly and TubeBuddy utilize predictive analytics to provide recommendations on the best times to post videos, variations for thumbnails, and different content formats based on audience behavior patterns.

Some platforms even have the capability for dynamic video assembly. This means that the AI can choose different opening sequences, product demonstrations, or calls-to-action depending on who the viewer is.

The Benefits of Personalization

The main advantage of using AI for content personalization is the potential for better conversion rates and viewer retention.

For example:

- A marketing video could automatically highlight specific product features based on whether the viewer has previously shown interest in pricing information or technical specifications.

- Educational content could adjust its pacing and complexity depending on how far along the learner is in their progress.

Previously, achieving this level of customization would have required manually segmenting audiences and creating multiple versions of each video—a process that is resource-intensive and time-consuming. However, with AI technology, these tasks can now be automated efficiently.
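
The dynamic assembly idea can be sketched as a simple lookup: each slot in the video has several variants, and viewer signals decide which one plays. Everything in this example is hypothetical, including the clip file names, the slot names, and the `interest` and `returning` signals; production systems would typically derive such signals from analytics rather than pass them in by hand.

```python
from typing import Dict, List

# Hypothetical segment library: every clip name below is illustrative only.
SEGMENTS: Dict[str, Dict[str, str]] = {
    "intro": {"default": "intro_general.mp4", "returning": "intro_welcome_back.mp4"},
    "demo": {
        "pricing": "demo_pricing.mp4",
        "technical": "demo_specs.mp4",
        "default": "demo_overview.mp4",
    },
    "cta": {"pricing": "cta_start_trial.mp4", "default": "cta_learn_more.mp4"},
}


def pick(slot: str, key: str) -> str:
    """Choose the variant matching `key`, falling back to the slot's default."""
    variants = SEGMENTS[slot]
    return variants.get(key, variants["default"])


def assemble_playlist(interest: str = "default", returning: bool = False) -> List[str]:
    """Build an ordered clip list (intro, demo, call-to-action) for one viewer."""
    intro_key = "returning" if returning else "default"
    return [pick("intro", intro_key), pick("demo", interest), pick("cta", interest)]
```

The default fallback matters: a viewer with an unknown or missing interest still gets a complete video, so personalization never produces a broken sequence.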

10. Quality Control Through Machine Learning-powered Review Systems (Dalet Media Cortex)

AI quality control software has introduced systematic, objective evaluation methods that catch technical issues and compliance violations before content reaches distribution channels. Machine learning-powered review systems analyze video assets at scale, identifying problems that human reviewers might miss during manual inspection.

Dalet Media Cortex represents a production-grade approach to automated quality assurance. The platform examines multiple technical parameters simultaneously:

- Audio integrity checks detecting clipping, silence gaps, phase issues, and loudness standard violations

- Visual quality assessment flagging compression artifacts, color space errors, frame rate inconsistencies, and resolution mismatches

- Metadata validation ensuring accurate timecodes, closed caption synchronization, and format specifications

- Content compliance scanning identifying potential rights management issues, prohibited content, and regulatory requirements

The system processes files in parallel with editing workflows, generating detailed reports that pinpoint specific timecode locations where issues occur. This allows editors to address problems immediately rather than discovering them during final delivery checks.
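
As a simplified picture of what a timecoded QC report involves, the sketch below scans audio samples for two of the issues listed above, clipping and silence gaps, and reports where each occurs. It is a generic illustration, not Dalet's implementation; the thresholds, function names, and sample format (floats between -1.0 and 1.0) are assumptions.

```python
from typing import List, Optional, Tuple


def timecode(sample_index: int, sample_rate: int) -> str:
    """Format a sample position as HH:MM:SS.mmm."""
    total_ms = sample_index * 1000 // sample_rate
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def audio_report(samples: List[float], sample_rate: int,
                 clip_level: float = 0.99, silence_level: float = 0.001,
                 min_silence_s: float = 0.5) -> List[Tuple[str, str]]:
    """Return (timecode, issue) pairs for clipped samples and long silent gaps."""
    issues: List[Tuple[str, str]] = []
    silence_start: Optional[int] = None
    min_silence = int(min_silence_s * sample_rate)

    for i, value in enumerate(samples):
        level = abs(value)
        if level >= clip_level:
            issues.append((timecode(i, sample_rate), "clipping"))
        if level <= silence_level:
            if silence_start is None:
                silence_start = i  # a quiet run begins here
        else:
            if silence_start is not None and i - silence_start >= min_silence:
                issues.append((timecode(silence_start, sample_rate), "silence gap"))
            silence_start = None

    # Close a quiet run that lasts until the end of the file.
    if silence_start is not None and len(samples) - silence_start >= min_silence:
        issues.append((timecode(silence_start, sample_rate), "silence gap"))
    return sorted(issues)
```

This sketch reports every clipped sample individually; a real report would more likely merge neighboring hits into one flagged range so editors see a single entry per problem.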

For studios managing high-volume output across multiple platforms, these review systems maintain consistent quality standards without expanding QC teams. The technology learns from correction patterns, improving detection accuracy over time and adapting to specific organizational requirements. Automated quality control reduces the risk of costly re-edits, failed deliveries, and reputation damage from technical errors that reach audiences.

Key Benefits and Challenges of Integrating AI into Video Production Services

The integration of AI in video production represents a fundamental shift in how content is created, managed, and delivered. Understanding both the advantages and obstacles of this transformation enables production teams to make informed decisions about technology adoption and workflow redesign.

Benefits

1. Speed Acceleration Across the Production Pipeline

AI-driven workflows compress timelines that traditionally required weeks into days or even hours. Pre-production tasks like script analysis, location scouting research, and storyboard generation now happen in minutes rather than days. Post-production benefits even more dramatically: automated editing systems can assemble rough cuts from hours of footage in the time it takes to review the material once. Color grading that once demanded days of meticulous adjustment can now be completed in hours, with AI-assisted tools maintaining consistency across thousands of shots. This faster turnaround directly increases project capacity, allowing studios to accept more work without proportional increases in staff or infrastructure.

2. Cost Reduction in Video Production Through Intelligent Automation

The financial implications of AI adoption extend beyond simple labor cost calculations. Automated transcription eliminates the need for dedicated transcription services, saving $1-3 per minute of content. AI-powered color grading reduces the hours senior colorists spend on routine adjustments, freeing them for creative decisions that genuinely require human expertise. Script development tools decrease the iterations needed before production begins, reducing pre-production costs by 20-40% in many cases. Smaller crews can accomplish what previously required larger teams—a single operator with AI-assisted camera systems can achieve focus, exposure, and framing quality that once demanded a dedicated focus puller and camera assistant.
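
The figures above translate into a quick back-of-the-envelope estimate. The sketch below applies the article's $1-3 per minute transcription range and its 20-40% pre-production range to a project; the function names and the example volumes are assumptions for illustration, and actual savings depend on the vendor rates and workflows being replaced.

```python
from typing import Tuple


def transcription_savings(minutes_of_content: float,
                          low_rate: float = 1.0,
                          high_rate: float = 3.0) -> Tuple[float, float]:
    """Estimated (low, high) savings in dollars at $1-3 per transcribed minute."""
    return (minutes_of_content * low_rate, minutes_of_content * high_rate)


def preproduction_savings(budget: float,
                          low_share: float = 0.20,
                          high_share: float = 0.40) -> Tuple[float, float]:
    """Estimated (low, high) savings in dollars at a 20-40% cost reduction."""
    return (budget * low_share, budget * high_share)


# Hypothetical workload: 50 videos of 12 minutes each is 600 minutes of content.
low, high = transcription_savings(50 * 12)
```

For that hypothetical workload, the transcription estimate works out to between $600 and $1,800.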

3. Quality Consistency Through Intelligent Error Detection

Machine learning systems identify technical flaws that human reviewers might miss during compressed review cycles. Audio levels remain consistent across cuts, color temperatures match between shots filmed days apart, and visual artifacts get flagged before final delivery. This consistency proves particularly valuable for serialized content, brand videos, or any project requiring uniform visual standards across multiple episodes or installments. The efficiency improvement in filmmaking comes not just from speed but from reducing the rework cycles that plague traditional production workflows.

4. Scaling Capabilities for Lean Production Teams

AI tools democratize production capabilities previously available only to large studios with extensive resources. A three-person team equipped with AI-assisted editing, color grading, and audio processing can deliver polish comparable to productions with ten-person post teams. This scaling advantage proves especially valuable for agencies handling multiple client projects simultaneously or content creators managing high-volume output schedules. The technology doesn't replace creative judgment—it amplifies the impact of skilled professionals by handling technical execution and repetitive tasks.

5. Real-time Creative Enhancement During Production

On-set AI systems provide immediate feedback that improves creative decision-making during shoots rather than discovering issues in post-production. Directors can review AI-generated shot compositions, evaluate lighting setups with predictive rendering, and make informed decisions about coverage needs based on automated script analysis. This real-time capability reduces the "fix it in post" mentality that often leads to budget overruns and compromised creative vision.

Challenges

While the potential benefits are significant, there are also challenges associated with integrating AI into video production:

1. Initial Investment Costs

Implementing AI technologies often requires substantial upfront investments in software licenses, hardware upgrades, and training programs for staff members who will be using these new tools regularly.

2. Resistance to Change Within Teams

Introducing any new technology can lead to resistance from team members who may feel threatened by automation or uncertain about their roles.

Beyond these adoption hurdles, the integration of AI into video production workflows introduces technical and ethical complexities that require careful consideration:

1. Authenticity concerns

The rise of deepfake technology and synthetic media has heightened worries about authenticity. While AI-generated faces and voices have legitimate uses in production, these same tools can be misused by malicious individuals to create deceptive content. To uphold audience trust, especially when AI is involved in generating or altering human likenesses, production teams must establish verification protocols and disclosure practices.

2. Consent and privacy frameworks

Technological advancements often outpace the development of consent and privacy regulations. Voice cloning platforms have the ability to mimic speech patterns using only a small amount of audio data, which raises concerns about the unauthorized use of performers' vocal signatures. Likewise, AI systems that are trained on actor appearances may produce synthetic performances without obtaining proper licensing agreements. It is crucial for studios to establish clear contractual language that addresses AI-generated derivatives and synthetic media rights in order to safeguard both talent and production companies from legal liabilities.

3. Intellectual property disputes

Disputes over intellectual property arise when AI models are trained on copyrighted materials such as videos, scripts, or music without obtaining explicit permission. There is a risk that the content generated by these models may unintentionally reproduce protected elements from the training datasets, leading to potential legal consequences. The application of fair use doctrine in relation to machine learning contexts is still uncertain. Production teams should keep records of their decision-making process regarding the selection of AI tools and ensure that vendors utilize ethically sourced training data.

4. Algorithmic bias

Biases present in training datasets can perpetuate gaps in representation and reinforce stereotypical portrayals. AI systems that are predominantly trained on Western media may struggle to understand diverse cultural contexts, skin tones, or linguistic variations. If not carefully monitored, automated casting suggestions or scene generation tools have the potential to amplify existing biases within the industry. Regular audits of AI outputs are essential in order to identify systematic distortions before they compromise the integrity of a project.

5. Human oversight

Despite advancements in automation, human oversight remains essential. While AI is proficient at recognizing patterns and performing repetitive tasks, it cannot make contextual judgments or grasp cultural nuances, and it lacks creative intuition. Experienced editors, directors, and producers need to review AI-generated content to ensure coherent storytelling, alignment with brand values, and adherence to ethical standards. The most effective workflows treat AI as a tool that enhances the work of skilled professionals rather than replacing them.

Real-World Examples Demonstrating How Companies Are Leveraging These Technologies Today

The practical application of AI in video production extends far beyond theoretical possibilities. Major studios, corporations, and independent creators are actively deploying these technologies to solve production challenges and achieve results that would be impossible through traditional methods.

1. Lucasfilm and Disney: Pushing the Boundaries of Visual Effects

Through projects like The Mandalorian, Lucasfilm and Disney have pushed the boundaries of AI-assisted visual effects. The production used AI-powered voice cloning to recreate the voice of a young Luke Skywalker and combined it with digital de-aging to deliver a performance that felt authentic despite the decades that had passed since the character's original appearances. This approach shows how AI can preserve legacy performances and enable storytelling that spans decades without compromising visual quality or narrative continuity.

2. Hour One and CoreLogic: Transforming Corporate Video Production

Hour One and CoreLogic represent the corporate video transformation happening through virtual presenter platforms. These companies deploy AI-generated avatars that deliver professional presentations, training materials, and marketing content without requiring on-camera talent or studio time. CoreLogic specifically uses this technology to produce consistent, multilingual property reports and educational videos at scale—a task that would traditionally require extensive resources and coordination across multiple production teams.

3. FOX Corporation: Revolutionizing Content Strategy with Audience Analytics

FOX Corporation partnered with AWS to implement AI-driven audience analytics that fundamentally changed their content strategy. The system processes massive datasets from viewing patterns, social media engagement, and demographic information to identify content preferences and optimize programming decisions. This intelligence allows FOX to deliver more targeted content that resonates with specific audience segments, improving both viewer retention and advertising effectiveness.

4. Coca-Cola: Driving Engagement through AI-Driven Advertising

Coca-Cola launched AI-driven advertising campaigns that generated significant engagement improvements over traditional creative approaches. The company used AI tools to analyze consumer sentiment, predict trending visual styles, and generate multiple creative variations for testing. One campaign utilized AI to create personalized video content that adapted messaging based on viewer demographics and viewing context, resulting in measurably higher engagement rates and brand recall.

5. Corridor Digital: Showcasing the Potential of AI-Assisted Animation

Corridor Digital, an independent production studio, created Anime Rock, Paper, Scissors—a short film that showcased the creative potential of AI-assisted animation. The project used AI tools to generate backgrounds, assist with character animation, and accelerate the production timeline from what would typically require months of work by large animation teams down to weeks with a small crew. The studio documented their process transparently, providing valuable insights into both the capabilities and current limitations of AI in creative production workflows.

Conclusion

The transformation of video production through artificial intelligence represents a fundamental shift in how creative content gets conceptualized, produced, and delivered. From automated scriptwriting and AI-assisted storyboarding to intelligent editing systems and machine learning-powered quality control, these technologies have moved beyond experimental phases into production-ready tools that deliver measurable results.

The applications and benefits of AI in video production extend across every stage of the workflow. Pre-production planning accelerates through intelligent script analysis and reference generation. On-set operations gain precision with real-time technical adjustments and object tracking. Post-production timelines compress dramatically through automated editing, color grading, and audio enhancement. Distribution reaches broader audiences via instant transcription, translation, and personalized content recommendations.

The evidence from industry leaders—Disney's digital effects innovations, corporate platforms adopting virtual presenters, major broadcasters using AI for audience insights, and creative studios pushing boundaries with AI-assisted filmmaking—demonstrates that these applications deliver tangible competitive advantages. Organizations that integrate AI thoughtfully into their production pipelines achieve faster turnaround times, maintain consistent quality standards, and scale output without proportional cost increases.

Clixie AI brings hands-on expertise in deploying these cutting-edge tools across diverse video production scenarios. Our team understands the practical implementation of AI-driven workflows, from selecting appropriate platforms for specific creative challenges to integrating multiple AI systems into cohesive production pipelines. We've applied top-tier AI technologies—Adobe Sensei for editing efficiency, Runway ML for visual effects, ElevenLabs for voiceover production, and analytics platforms for content optimization—across projects spanning corporate communications, marketing campaigns, and creative storytelling.

The opportunity to leverage AI in video production continues expanding as tools mature and new capabilities emerge. Organizations ready to adopt these technologies gain significant advantages in production speed, creative flexibility, and market responsiveness.

Ready to transform your video production capabilities with AI? Connect with Clixie AI to explore how our expertise in AI-assisted filmmaking can deliver innovative, high-impact videos tailored to your specific objectives and audience requirements.

FAQs (Frequently Asked Questions)

What are the key applications of AI in modern video production?

AI is revolutionizing every stage of video production, including concept development and scriptwriting automation, storyboarding with AI-generated references, filming assistance through casting and location suggestions, on-set technical support like real-time focus and exposure adjustment, automated video editing and scene assembly, color grading and VFX enhancement using tools like Colourlab AI and Runway ML, audio editing and voiceovers with ElevenLabs or Murf, transcription and subtitling via Subly and Otter.ai, content personalization through analytics-driven recommendations, and quality control with machine learning-powered review systems such as Dalet Media Cortex.

How does AI improve efficiency and reduce costs in video production services?

AI significantly accelerates the entire video production workflow from preproduction planning to final edits by automating repetitive tasks such as scriptwriting, color grading, transcription, and editing. This reduces the need for large crews and shortens turnaround times. Additionally, intelligent automation enhances quality consistency across projects while enabling smaller teams to manage high-volume demands without compromising creativity or polish.

What challenges are associated with integrating AI into video production?

Key challenges include concerns about authenticity and trustworthiness due to risks like deepfakes, consent and privacy issues related to likeness usage and voice cloning technologies, intellectual property considerations when using copyrighted materials in training data or generated content, biases embedded in training datasets affecting representation and fairness, and the crucial need for human oversight to maintain accountability and preserve creative value throughout the production process.

Can you provide examples of companies successfully leveraging AI in their video productions?

Yes. Lucasfilm/Disney utilize AI for voice cloning and digital de-aging effects; Hour One/CoreLogic employ virtual presenter platforms transforming corporate videos; FOX Corporation leverages AWS AI for audience data analysis to enhance targeted content delivery; Coca-Cola has executed successful AI-driven advertising campaigns boosting engagement; Corridor Digital pioneered an anime short film created extensively with AI assistance.

How does AI assist during the filming phase of video production?

During filming, AI provides real-time technical support such as focus and exposure adjustments as well as object tracking to ensure smooth operations on set. It also analyzes scripts to offer casting suggestions and optimal location recommendations, thereby enhancing shooting efficiency and aiding creative decision-making.

What role does AI play in post-production processes like editing, color grading, and audio enhancement?

In post-production, AI automates video editing tasks, including scene assembly, through tools like Adobe Sensei. On the visual side, AI-powered tools such as Colourlab AI and Runway ML handle color grading, VFX enhancement, and animation. AI also improves audio quality by automating editing techniques and generating realistic voiceovers using platforms like ElevenLabs, Murf, or WellSaid Labs.
